If you’re seriously considering moving from an in-house server room to a colocation facility, you'll likely have some questions about power. What is a Volt-Ampere, you may be asking, and how is a VA rating different from a power rating in Watts?

You may also be wondering how to estimate your power requirements – do you just add up all the VA ratings on the labels of your gear? We’ll explore this question in a subsequent blog post, but for now, let’s dive in and demystify the Volt-Amp!

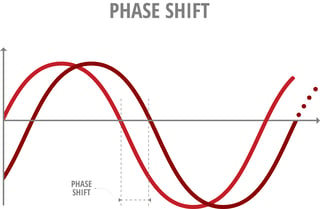

Out-of-Phase Waves

With Direct Current (DC) power, we simply multiply the voltage by the current (in Amps) to derive the power (in Watts). But with Alternating Current (AC), the situation is more complex because we’re dealing with waves.

In an AC system, if the voltage and current waveforms are perfectly in sync, then we can multiply voltage by current to derive power, just as with DC. Because AC waves are constantly changing amplitude, the voltage and current figures used here are RMS (root-mean-square) values. Deriving them takes a bit of calculus, but that’s another story.

When the voltage and current waves are offset – or out of phase – the product of voltage and current goes negative for part of each cycle. That negative component cancels out some of the “real” power that is available to do work (create heat, or flip bits in a computer’s CPU for example).
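To make the cancellation concrete, here is a minimal Python sketch that averages the instantaneous product of a voltage wave and a current wave over one full cycle. It assumes pure sine waves, and the function name `average_power` and the 120 V / 10 A figures are illustrative assumptions rather than values from any particular device.

```python
import math

def average_power(v_rms, i_rms, phase_deg, samples=10_000):
    """Average v(t) * i(t) over one cycle for two sine waves.

    phase_deg is how far the current wave lags the voltage wave.
    """
    v_peak = v_rms * math.sqrt(2)  # peak of a sine wave is RMS * sqrt(2)
    i_peak = i_rms * math.sqrt(2)
    phase = math.radians(phase_deg)
    total = 0.0
    for k in range(samples):
        theta = 2 * math.pi * k / samples
        # Instantaneous power; negative whenever v and i have opposite signs.
        total += v_peak * math.sin(theta) * i_peak * math.sin(theta - phase)
    return total / samples

# Apparent power is 120 V * 10 A = 1200 VA in every case below.
for phase_deg in (0, 60, 90):
    print(phase_deg, round(average_power(120, 10, phase_deg), 1))
```

With the waves in phase, the average comes out at about 1200 W. At a 60 degree offset it drops to about 600 W, and at 90 degrees it falls to roughly zero, even though the voltage and current readings have not changed.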

This is where Watts and Volt-Amps come into the picture. In an AC system, the real power that is available to perform work is measured in Watts. But the “apparent” power – that is, the figure you would get if you were to stick a voltmeter and ammeter on the power cable and multiply the two readings – is measured in Volt-Amps.

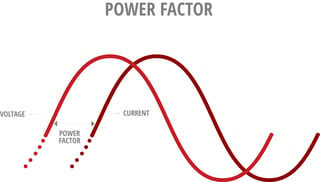

Power Factor

The ratio between real power (Watts) and apparent power (VA) is called power factor. When the voltage and current waves are perfectly in phase, real and apparent power are equal and all is well in the world; all the power being delivered to the device is used to produce some useful work. This represents a power factor of 1.0.
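As a quick illustration of that ratio, the sketch below computes power factor from a Watt figure and a VA figure. The function name `power_factor` and the 450 W / 600 VA example are hypothetical, chosen only to show the arithmetic.

```python
def power_factor(real_power_w, apparent_power_va):
    """Return real power (W) divided by apparent power (VA)."""
    if apparent_power_va <= 0:
        raise ValueError("apparent power must be positive")
    return real_power_w / apparent_power_va

# Hypothetical device: draws 600 VA but only 450 W does useful work.
print(power_factor(450, 600))

# In-phase case: real and apparent power are equal.
print(power_factor(600, 600))
```

The first call gives 0.75 and the second gives 1.0, the unity case described above.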

When the waves are offset, the power factor drops below unity. In the worst case, if the voltage and current waves are offset by 90 degrees, the power factor is zero. In this case, wires get hot because lots of current is sloshing through them and yet no actual work is being done – whatever is connected to the wires is doing nothing except storing energy and releasing it a fraction of a second later. Sort of like taking a step forward and a step back and never moving.

Low power factors are bad because we need to size wires and circuit breakers to handle the higher currents that flow without doing any useful work. This is particularly problematic for power utilities. If you look through the fine print of your utility bill, there may be an extra charge buried there somewhere to account for a non-unity power factor.
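To see why the wiring has to be sized up, here is a rough Python sketch of the current a load draws for the same real power at different power factors. The helper name `current_draw_amps` and the 1200 W / 120 V figures are assumptions for illustration, and the calculation covers a simple single-phase circuit only.

```python
def current_draw_amps(real_power_w, voltage_v, power_factor):
    """Current needed to deliver real_power_w at a given power factor.

    Apparent power (VA) = real power / power factor, and current = VA / volts.
    """
    if not 0 < power_factor <= 1:
        raise ValueError("power factor must be in the range (0, 1]")
    apparent_power_va = real_power_w / power_factor
    return apparent_power_va / voltage_v

# Same 1200 W of useful work on a 120 V circuit.
for pf in (1.0, 0.8, 0.6):
    print(pf, round(current_draw_amps(1200, 120, pf), 2))
```

At unity power factor the load draws 10 A. At 0.6 it draws about 16.67 A for exactly the same useful work, and the wires and breakers have to carry that extra current.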

In the past, computer power supplies typically had poor power factors. This meant that the facility wiring and circuit breakers had to be somewhat oversized relative to the actual power requirements of the machine. You can see this as a significant difference between the VA (Volt-Amp) and W (Watt) ratings on older equipment – the VA rating will be higher than the Watt rating.

Power supplies in modern IT gear (certainly anything built in the last 10 years) include power factor correction circuitry which ensures a near-unity power factor. These days, the VA and W ratings should be nearly equivalent. But when assessing power requirements, you should stick to the VA rating of the device if available.
